Balancing Privacy and Progress When Sharing Real-World Imaging Data

Reading time /

4 min

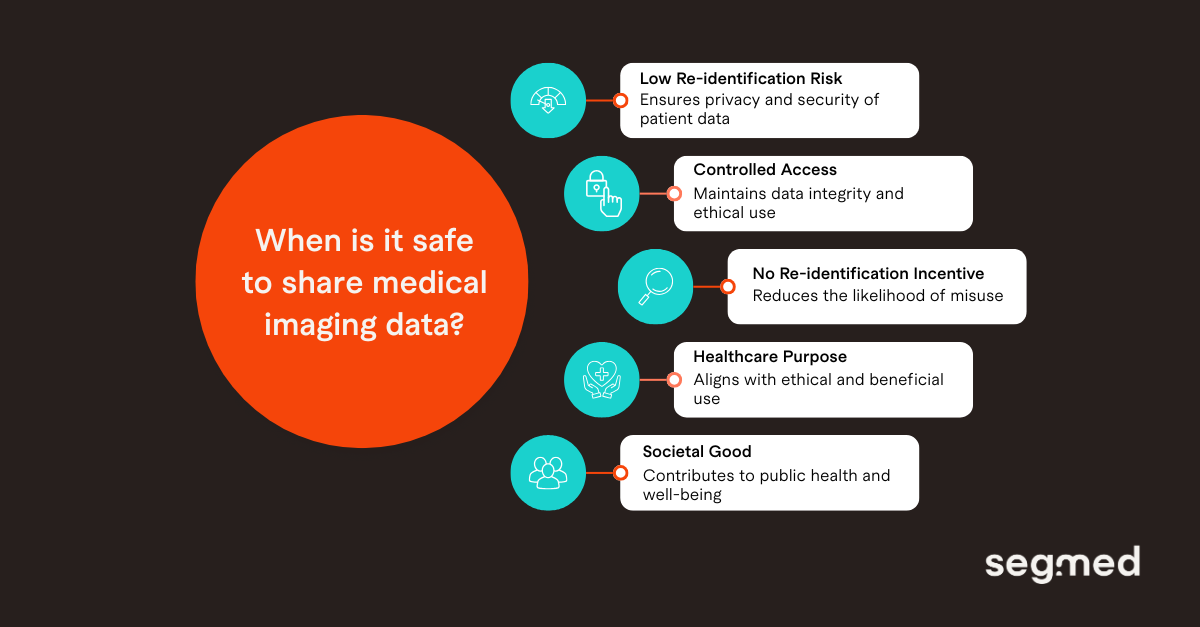

Industry

Real‑World Imaging Data (RWiD) is increasingly being shared and used in research. It is valuable since it allows for new insights into patient care and outcome. But, it also comes with significant responsibilities. Its sensitivity necessitates strict security and privacy measures to safeguard patient information. When sharing multimodal RWiD, the main goal is to protect patient privacy, maintain data integrity, meet regulatory requirements, and promote the ethical use of health data to support care and advance medical research. The answer lies in striking the right balance, keeping privacy and compliance strong, while leaving room for innovation.

Does Imaging Data Demand Additional Privacy Protection?

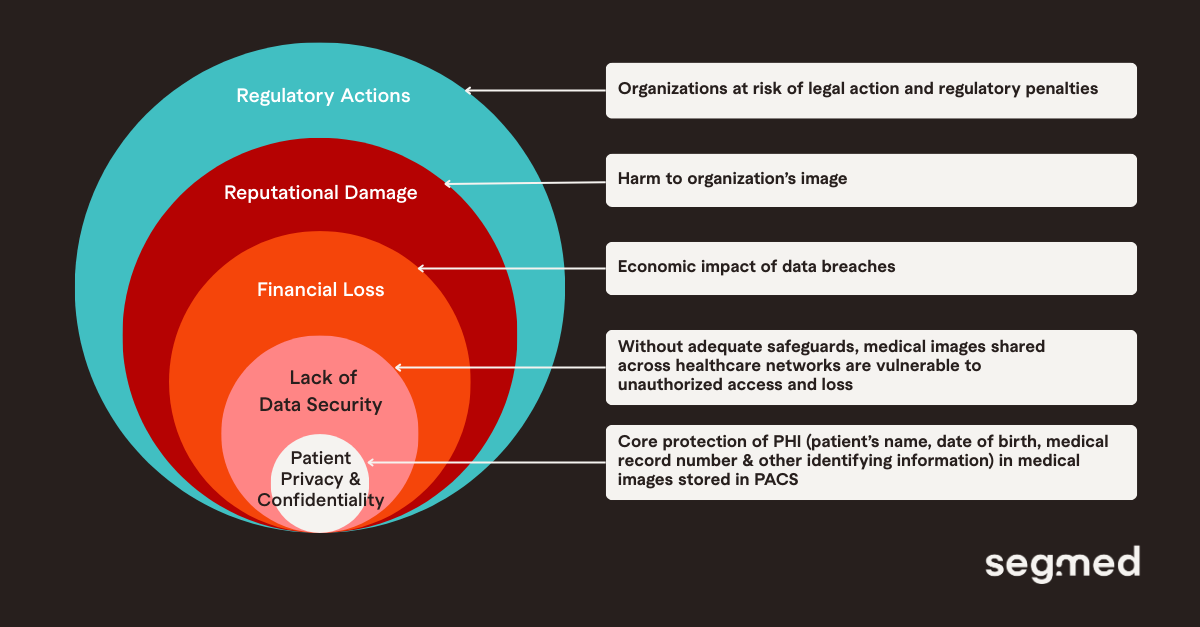

Imaging data includes standard protected health information (PHI) such as a patient’s name, date of birth, medical record number, and other identifiable details, but medical images also contain metadata which can include acquisition parameters, device identifiers, and embedded demographics. The information stored in this metadata in DICOM files and other imaging formats can reveal patient information if not de-identified effectively. Along with this, device-specific artifacts may reveal acquisition sites or equipment models, radiologist annotations may include free-text clinical impressions, and images typically contain a wealth of clinical data, including biomarker information. All of this highlights the need for further privacy protections and makes medical images particularly sensitive. Therefore, privacy protections require both image-level (pixel) de-identification and metadata scrubbing.

Another concern is the impact on the organization, from regulatory penalties and legal liabilities to reputational damage and loss of patient trust, if a data or security breach occurs.

What Are Some Privacy-preserving Techniques Applied in Medical Imaging?

Medical imaging uses various privacy protection technologies to protect patients’ privacy and their sensitive information. In cases where data owners, such as healthcare centers, need to share their data with trusted parties, multiparty data-sharing mechanisms may be necessary.

1. Data Encryption

Encrypting data is a primary method for protecting medical images during transmission or storage by ensuring only the rightful data holder can view and interpret it. Symmetric encryption is fast and efficient for large medical datasets but requires extensive key management. Asymmetric encryption uses a public key for encryption and a private key for decryption, offering more security but being more computationally intensive. Homomorphic encryption allows computations directly on encrypted data, allowing private processing even during AI model training.

2. De-identification and Anonymization

De-identification and anonymization are the most widely used methods to protect patient privacy in medical image sharing and processing. De-identification removes personally identifiable information such as patient name, ID numbers and metadata like date of birth. De-identification may still allow re-identification through the healthcare provider. For example, when a patient undergoes a follow-up exam, this can be linked to the previous exams of the de-identified patient, but only the healthcare provider has access to the identifiable information. Anonymization on the other hand, does not allow for re-identification, by eliminating direct and indirect identifiers that could link someone to another dataset. However, anonymization is not a perfect solution, as it for example does not allow for adding follow-up exams.

Advanced tools are required for effective de-identification, such as optical character recognition (OCR) for image content and systematic removal or masking of Digital Imaging and Communications in Medicine (DICOM) tags that are also PHI in the metadata. Data de-identification tools have achieved de-identification success rates of more than 90% for DICOMs.

3. Differential Privacy (DP)

DP increases data protection by adding random noise to datasets or model training updates. That makes individual patient contributions statistically indistinguishable and nearly impossible to trace back. It allows organizations to extract value from sensitive medical data while preserving aggregate utility. DP works especially well with complementary methods like encryption and federated learning to further reduce privacy risk. Though, too much noise can affect accuracy and precision, so careful calibration is needed to balance privacy protection with data usefulness.

4. Access Controls

This includes implementing role based policies and procedures to control who has access to medical images and data. Access control can include measures, such as authentication (verifying a person’s identity before granting access to data) and authorization (only authorized personnel have access to data.

5. Image Deformation in Medical Imaging

Medical image deformation can be used to protect patient privacy by deforming identifiable features (such as facial features in tomographic images) to prevent the image from being traced to a specific individual. It should be considered that while image deformation can help protect the privacy of patients in medical imaging, it can also reduce the diagnostic value of images when retrieving the original image from deformation.

Which Are Some Data Protection Laws and Regulations to Ensure Patient Privacy?

Robust data governance is crucial for using imaging data in secondary research while protecting patient privacy. Today's governance frameworks follow principles similar to those applied to other health data such as de-identification, secure storage, and opt-in or opt-out mechanisms that give patients control over how their data is used. The availability of these options depends on local regulations and provider standards. De-identification is now a standard step before sharing data, although it brings unique challenges for imaging data.

Data Protection laws and regulations such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States, General Data Protection Regulation (GDPR) in the European Union and the Personal Information Protection and Electronic Documents Act (PIPEDA) in Canada. HIPAA defines which pieces of information are considered potential patient identifiers, or PHI, and applies specifically to health information handled by covered entities. In contrast, GDPR covers a broader range of personal information and emphasizes explicit consent and the right to erasure (“right to be forgotten”). PIPEDA focuses on consent-based use and applies to both health and non-health personal information within Canada’s private sector.

These laws and regulations also require organizations to report data breaches, ensuring that both individuals and authorities are informed if personal data is compromised. Though, how well these frameworks work can differ widely based on the jurisdiction where they are implemented.

How Segmed Supports Privacy-focused RWiD Sharing

At Segmed, we recognize the importance of meeting regulatory and privacy requirements while assuring the ethical healthcare information. We provide access to over 150 million regulatory-grade, de-identified, multimodal imaging datasets that meet the highest regulatory requirements. Our organization has put in place secure data de-identification systems to protect patient information while maximizing RWiD utility in foundation model training. In addition to this, we provide centralized datasets which are harmonized, standardized as it will allow faster model development and easier regulatory alignment. We are HIPAA compliant, both through Safe Harbor as well as through Expert Determination. Additionally, Segmed also has maintained SOC 2 Type 2 and ISO 27001 certifications for several years, reflecting a sustained investment in security, governance, and operational rigor. These certifications cover our information security management systems and internal controls, and are regularly audited to ensure ongoing compliance with industry best practices.

We collaborate with our partners to ensure that our datasets are ethically sourced, unbiased, and representative of diverse patient populations. By strictly adhering to regulatory guidelines, our RWiD datasets help train and fine-tune foundation models. These RWiD-driven models provide enhanced diagnostic accuracy, generalizability across populations, and improved robustness to varying clinical settings.

Our regulatory-grade, de-identified, and annotated datasets are ideal for developing AI models across oncology, neurology, and cardiology disease areas. Segmed has been part of more than 35 FDA clearances, multiple foundation models, and fit-for-purpose real-world evidence research projects.

Connect with us to explore how our diverse, high-quality tokenized imaging datasets can enhance the training and validation of healthcare AI models.

Frequented Asked Questions - F.A.Q.

What makes real-world imaging data more sensitive than other health data?

Real-world imaging data is more sensitive because it includes both image pixels and embedded DICOM (Digital Imaging and Communications in Medicine) metadata that can contain identifiable patient, device, or institution information. When combined, these elements increase re-identification risk compared to structured health records alone.

How is DICOM metadata de-identified?

DICOM metadata is de-identified by removing or masking direct identifiers, generalizing dates, and anonymizing institution and device information in accordance with regulatory standards. Automated validation ensures no identifiable data remains in standard or private tags.

What regulations govern the sharing of RWiD?

The sharing of real-world imaging data is governed by multiple regional and international regulations, including:

- HIPAA (U.S.), which defines standards for de-identification and permissible data use

- GDPR (EU), which emphasizes lawful processing, data minimization, and purpose limitation

- Local health data protection laws and ethical review requirements

In addition to legal frameworks, data sharing often requires compliance with institutional review board (IRB) approvals, data use agreements (DUAs), and ethical AI principles, particularly when imaging data is used for research, AI development, or regulatory submissions.

How does Segmed ensure data privacy and compliance?

Segmed ensures data privacy and regulatory compliance through a privacy-by-design approach across the entire imaging data lifecycle. This includes sourcing data only through authorized healthcare partners, applying standardized and validated de-identification pipelines, and enforcing strict access controls and audit trails. Segmed’s processes are aligned with global data protection regulations and supported by governance frameworks that emphasize transparency, accountability, and risk mitigation. Continuous monitoring, documentation, and collaboration with data providers help maintain compliance as regulatory expectations evolve.

Can de-identified imaging data still be used for AI model training?

Yes, properly de-identified imaging data retains clinical features needed for AI training while protecting patient privacy. This enables the development of generalizable and compliant medical imaging AI models.

References

- de Araujo AL, Wu J, Harvey H, Lungren MP, Graham M, Leiner T, Willemink MJ. Medical imaging data calls for a thoughtful and collaborative approach to data governance. PLOS Digit Health. 2025 Oct 28;4(10):e0001046. doi:10.1371/journal.pdig.0001046. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC12561909/

- Gaudio A, Köhler T, et al. PRIMIS: Privacy-preserving medical image sharing via deep sparsifying transform learning with obfuscation. Comput Biol Med. 2024;171:107406. doi:10.1016/j.jbi.2024.104583. Available from: https://www.sciencedirect.com/science/article/pii/S1532046424000017

- Zhu Y, Yin X, Liew AW-C, Tian H. Privacy-preserving in medical image analysis: a review of methods and applications [preprint]. arXiv [Internet]. 2024 Dec 5 [cited 2025 Dec 31]. Available from: https://arxiv.org/abs/2412.03924

- Decentriq AG. Secure medical data collaboration: making progress without risking privacy [Internet]. Zürich (CH): Decentriq AG; 2025 Sep 30 [cited 2025 Dec 31]. Available from: https://www.decentriq.com/article/secure-medical-data-collaboration#methods-and-strategies-for-secure-healthcare-data-collaboration

- Enlitic. PHI data protection and security [Internet]. Loveland (CO): Enlitic; 2023 May 17 [cited 2025 Dec 31]. Available from: https://enlitic.com/blogs/phi-data-protection/