Multimodal Data Pipelines: The New Gold Standard in Pharma Research

Reading time /

3 min

Industry

.png)

In the current era, the pharmaceutical industry has largely embraced the "Multimodal Dataset". R&D teams are successfully integrating genomics, transcriptomics, and Electronic Health Records (EHR) to build longitudinal patient views. This shift toward integrated evidence is driven by the realization that biology is too complex for unimodal analysis. This has made multimodal datasets not just desirable, but essential.

What makes multimodal pipelines the new gold standard is not volume alone, it is coherence. When data sources are systematically linked, time aligned, and governed under consistent quality and compliance frameworks, they enable answering research questions that were previously impossible to answer. Questions about patient heterogeneity, real world treatment effectiveness, and disease evolution across care pathways are now becoming accessible.

However, a significant gap remains: medical imaging is the most data-rich phenotypic record and is still being treated as an isolated attachment rather than a primary, AI-ready modality. While many pipelines are multimodal in name, the imaging component is often unstructured and siloed in legacy PACS systems. Such multimodal datasets often lack the robust de-identification required for global R&D. To reach the next frontier of drug discovery, imaging must be elevated from a supporting scan to a regulatory-grade ground truth.

From Fragmented Data to End-to-End Pipelines

Pharma research historically treated different data types as separate islands. Clinical labs lived apart from imaging archives, genomics platforms rarely talked to real-world evidence sources, and text-based records were processed independently from structured data. The result? Fragmented insights, slower discovery, and analytic blind spots.

Why multimodal pipelines make more sense now:

- Holistic view: Combining clinical, molecular, text, and image data replicates how clinicians diagnose and treat diseases not one data type at a time.

- Improved accuracy: Multimodal datasets models outperform unimodal datasets approaches in predictive tasks because they integrate complementary signals.

But multimodal dataset value is not realized simply by aggregating data. It depends on intentional pipeline architecture where interoperable ingestion, harmonization, fusion, AI/ML-ready formatting, and downstream analytics are baked in from the start. This means designing pipelines that:

- Support research questions (e.g., target discovery, patient stratification)

- Enable regulatory readiness (FAIR data, traceability)

- Catalyze commercial insights (biomarker validation, real-world evidence modeling)

Why End-to-End Multimodal Design Matters

End-to-end multimodal design matters because it enables joint learning across imaging and clinical data, capturing complex interactions that siloed approaches miss.

Imaging Data as Phenotypic Ground Truth in Multimodal Pipelines

Imaging is not just another modality, it is arguably the phenotypic ground truth in many therapeutic areas, especially in oncology (solid tumors), neurology, and cardiovascular medicine.

Medical images capture what the disease looks like in anatomy and function: from structural atrophy in the brain to tumor boundaries, vascular changes, and organ-level responses. When integrated with clinical, molecular, and genomic data, imaging provides a biological reference that grounds abstract biomarkers in observable disease features.

Evidence Snapshot

“In diseases like Alzheimer’s, imaging distinguishes biological truth from clinical approximation.”

Take Alzheimer’s disease as an example, clinical diagnosis based solely on cognitive symptoms is challenging; studies estimate that 20-30% of people are misdiagnosed in specialized care when neuroimaging and biomarkers aren’t used. Neuroimaging such as MRI and PET reveals hallmark changes in amyloid plaques, tau pathology, brain atrophy that are directly linked to disease mechanisms. These markers:

• Improve diagnostic precision,

• Enable earlier detection,

• Reduce patient misclassification,

• Inform both clinical and trial stratification.

In a multimodal dataset context, imaging serves as the anchor that contextualizes other modalities such as blood biomarkers, cognitive scores, genomic risk turning them into actionable insights rather than isolated signals. Without imaging, multimodal dataset models risk learning correlations not biology.

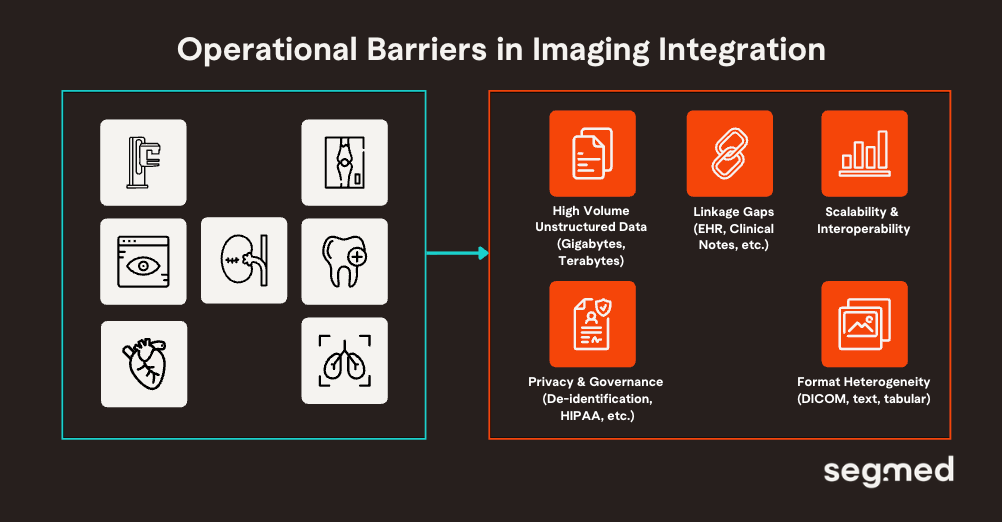

Imaging’s Central Role and Operational Complexity

Imaging offers high value, but it is structurally harder to operationalize at scale. The challenge with integrating imaging is not limited to relevance, but also extends to readiness. These constraints frequently stall multimodal pipeline adoption, relegating imaging to a secondary role despite its evidentiary value. Core challenges in imaging integration:

High Volume and Dimensionality

Longitudinal imaging generates gigabytes-terabytes data (e.g., 4D CT/MRI sequences), demanding efficient compression and processing unlike tabular EHRs. High-resolution voxels create curse-of-dimensionality issues, where parameters exceed samples, amplifying overfitting without augmentation.

Format Heterogeneity

Clinical images (e.g., DICOM) carry high-resolution pixel data and rich metadata that don’t fit neatly into EHR tables or text pipelines. Standardizing DICOM across sites and linking it to clinical records remains a persistent gap.

Unstructured Data Fusion

Connecting high-resolution image features to unstructured pathology reports, radiology text, and free-text clinical narratives requires advanced NLP and mapping methods that are not yet widely implemented.

Privacy & Governance Variability

Differing privacy policies across global hospital systems hinder consistent imaging aggregation for research. Federated learning and secure de-identification help, but remain technically and operationally complex.

Computational Demands

Imaging creates very large datasets. Managing storage, preprocessing, and AI/ML training pipelines for high-resolution data demands scalable compute and expert orchestration.

Evidence Snapshot

Imaging Is Valuable, but Structurally Harder

• What the literature shows: Multimodal integration reviews consistently identify imaging as the most information-dense yet operationally complex modality due to data size, standardization, and governance challenges.

• Implication: The bottleneck is not awareness of imaging’s value, but the infrastructure required to integrate it at scale.

As multimodal ambition grows across pharma research, the limiting factor is no longer awareness of imaging’s value; it is the ability to operationalize imaging alongside other modalities at scale despite the challenges.

Segmed is the Solution to Gaps in Multimodal Datasets

Segmed enables multimodal pipelines by making real-world imaging data research-ready, linkable, and scalable. Its platform brings together de-identified imaging studies with associated clinical, demographic, and outcomes data, allowing researchers to work with imaging not as a siloed asset, but as a first-class modality within multimodal research workflows.

By addressing foundational challenges including DICOM standardization, cohort curation, privacy-preserving data access, and longitudinal linkage. Segmed removes the friction that traditionally prevents imaging from being integrated early and meaningfully into pipelines. This enables multimodal datasets that are AI-ready, regulatory-defensible, and reflective of real-world patient populations, rather than narrowly constructed research cohorts.

Importantly, Segmed shifts multimodal pipelines from retrospective experimentation toward intentional, end-to-end design, where imaging informs discovery, supports validation, and strengthens real-world evidence generation across the product lifecycle. In doing so, Segmed helps close the gap between multimodal datasets theory and multimodal execution, ensuring imaging serves as the phenotypic backbone.

Our regulatory-grade, de-identified, and annotated datasets are ideal for developing AI models across oncology, neurology, and cardiology disease areas. Segmed has been part of more than 35 FDA clearances, multiple foundation models, and fit-for-purpose real-world evidence research projects.

Connect with us to explore how our diverse, high-quality tokenized imaging datasets can enhance the training and validation of healthcare AI models.

Frequented Asked Questions - F.A.Q.

What is a multimodal data pipeline in pharma research?

A multimodal data pipeline in pharma research integrates multiple real-world and clinical data types such as medical imaging, electronic health records, genomics, laboratory results, and outcomes data into a unified, analyzable framework. By aligning and harmonizing these data sources, the pipeline enables deeper insights into disease biology, treatment response, and real-world effectiveness, while supporting advanced analytics, biomarker discovery, and AI development.

Why is medical imaging critical in multimodal pipelines?

Medical imaging is critical in multimodal pipelines because it provides objective, spatially rich evidence of disease biology and treatment response that complements clinical, molecular, and outcomes data. Medical imaging serves as the anchor in multimodal analysis as it relates biological phenomena in the body to certain therapeutic agents and real outcomes.

Why are multimodal datasets becoming the gold standard for drug discovery?

Multimodal datasets in drug discovery are becoming the gold standard because they integrate different healthcare data into one place. Various healthcare data such as medical imaging, clinical records, molecular data, and outcomes altogether provide a much more complete view of the biology of disease and treatment response. This integrated perspective reduces uncertainty, improves target validation and patient stratification, and enables more predictive models across the drug development lifecycle.

What challenges prevent imaging from being fully integrated into multimodal pipelines?

Imaging can present difficulties in being integrated as part of multimodal pipelines because of variability in imaging protocols, image metadata, size, and lack of interoperability between imaging information and other healthcare data. Other complexities such as difficulty in annotations, de-identification, and requirements for standardized frameworks makes it challenging for integrating imaging data into a multimodal dataset.

How does Segmed enable imaging-first multimodal research?

Segmed provides large-scale, regulatory-grade real-world imaging data that can be connected to clinical, treatment, and outcomes information, thus enabling imaging-first multimodal research. With standardized ingestion, harmonization, quality controls, and privacy-preserving pipelines, imaging becomes a foundational modality for creating integrated real-world evidence to support AI, biomarker, and regulatory research.

References

- Hao Y, Cheng C, Li J, Li H, Di X, Zeng X, et al. Multimodal Integration in Health Care: Development With Applications in Disease Management. Journal of Medical Internet Research [Internet]. 2025 Jun 27 [cited 2026 Jan 16];27:e76557–7. Available from: https://www.jmir.org/2025/1/e76557

- Mani S, Lalani SR, Pammi M. Genomics and multiomics in the age of precision medicine. Pediatric Research [Internet]. 2025 Mar [cited 2026 Jan 16];97(4):1399–410. Available from: https://www.nature.com/articles/s41390-025-04021-0

- Simon BD, Ozyoruk KB, Gelikman DG, Harmon SA, Türkbey B. The future of multimodal artificial intelligence models for integrating imaging and clinical metadata: a narrative review. Diagnostic and Interventional Radiology [Internet]. 2024 Oct 2 [cited 2026 Jan 16]; Available from: https://dirjournal.org/articles/doi/dir.2024.242631

- Hansson O. Biomarkers for neurodegenerative diseases. Nat Med. 2021 Jun;27(6):954-963. doi: 10.1038/s41591-021-01382-x. Epub 2021 Jun 3. PMID: 34083813.

- Hyman BT, Phelps CH, Beach TG, Bigio EH, Cairns NJ, Carrillo MC, et al. National Institute on Aging–Alzheimer’s Association guidelines for the neuropathologic assessment of Alzheimer’s disease. Alzheimer’s & Dementia [Internet]. 2012 Jan;8(1):1–13. Available from: https://www.sciencedirect.com/science/article/pii/S1552526011029803

- Chapleau M, Iaccarino L, Soleimani-Meigooni D, Rabinovici GD. The Role of Amyloid PET in Imaging Neurodegenerative Disorders: A Review. Journal of Nuclear Medicine [Internet]. 2022 Jun [cited 2026 Jan 16];63(Supplement 1):13S19S. Available from: https://jnm.snmjournals.org/content/63/Supplement_1/13S