How Real-World Imaging Data Can Close the AI Validation Gap

Research

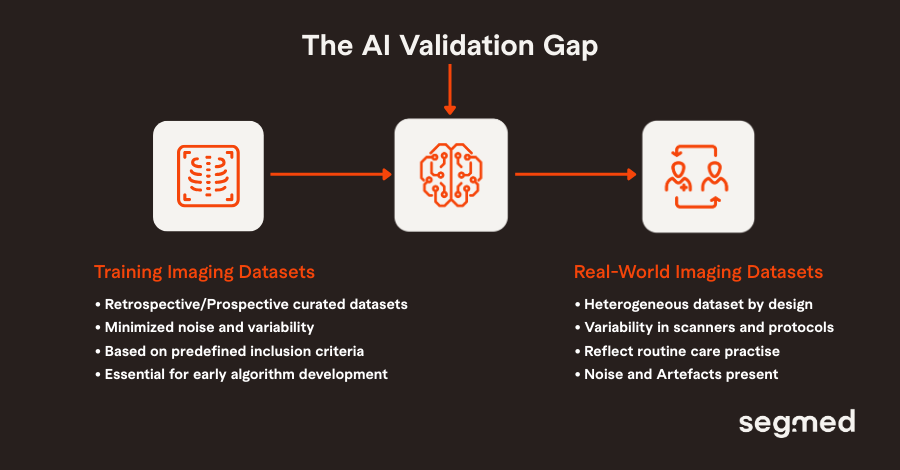

Artificial intelligence (AI) has demonstrated impressive performance across a range of medical imaging tasks, from lesion detection and segmentation to prognostication and treatment response assessment. However, most imaging AI systems are still trained and validated using limited and highly curated datasets. Such datasets are often drawn from a small number of institutions, scanners, and patient populations. While these datasets are essential throughout the algorithm development process, they rarely reflect the complexity, heterogeneity, and unpredictability of real-world clinical practice.

High performance on internal validation does not guarantee reliable performance in real-world clinical settings.

This disconnect is often referred to as the AI validation gap. It has become one of the central barriers to trustworthy clinical AI. Closing this gap requires validating models against real-world imaging data that reflect true deployment conditions, enabling robust, fair, and continuously monitored performance.

Understanding the AI Validation Gap in Imaging

Imaging AI models are commonly developed using retrospective datasets that are carefully curated to optimize image quality and minimize noise and variability. Such datasets are useful for early algorithm development, but they rarely reflect how imaging is acquired and interpreted in routine clinical practice. In the real world, imaging data are inherently heterogeneous. Differences in scanner manufacturers, reconstruction algorithms, acquisition protocols, and patient populations introduce variability that curated datasets often exclude. When models are validated only under homogeneous conditions, they risk learning dataset-specific shortcuts rather than clinically meaningful signals.

Evidence Snapshot

A multi-institutional imaging AI study evaluated pneumonia detection models across both internal and external hospital datasets. While the models achieved strong performance during internal validation (area under the receiver operating characteristic curve, or AUC, up to ~0.93), performance dropped substantially when tested on data from independent institutions (AUC ~0.81). Further analysis revealed that the models had learned hospital-specific and prevalence-related signals rather than disease-specific features, limiting their ability to generalize to real-world clinical settings. [1]
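The internal-versus-external comparison described above can be sketched in a few lines. This is a minimal illustration, not the method used in the cited study: the function names and the toy labels and scores are hypothetical, and the AUC is computed with the standard pairwise (rank-based) definition.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive case outscores
    a random negative case (ties count as half a win)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def generalization_gap(internal, external):
    """Return (internal AUC, external AUC, drop) for two (labels, scores) pairs."""
    auc_internal = roc_auc(*internal)
    auc_external = roc_auc(*external)
    return auc_internal, auc_external, auc_internal - auc_external


# Toy data, invented for illustration only (not from any real study).
internal_set = ([1, 1, 1, 0, 0, 0], [0.9, 0.8, 0.7, 0.3, 0.2, 0.1])
external_set = ([1, 1, 1, 0, 0, 0], [0.9, 0.4, 0.6, 0.5, 0.2, 0.7])

auc_int, auc_ext, gap = generalization_gap(internal_set, external_set)
print(f"internal AUC={auc_int:.2f}, external AUC={auc_ext:.2f}, gap={gap:.2f}")
```

In practice the external set would come from institutions, scanners, and populations that played no part in training. A large gap is a signal to investigate what the model has actually learned, not a diagnosis in itself.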

Real-World Imaging Data as the Foundation for Generalizable AI

Real-world imaging data expose AI models to the full spectrum of clinical variability they might encounter after deployment. These datasets include diverse patient populations, longitudinal follow-up, varied disease presentations, and differences in imaging hardware and protocols.

This diversity is especially critical for identifying edge cases. Rare diseases, atypical presentations, and uncommon comorbidities are frequently underrepresented in highly curated training datasets, yet failures in these scenarios can have disproportionate clinical consequences.

Evidence Snapshot

A large-scale imaging AI study demonstrated that strong overall performance metrics can mask severe failures in clinically important subgroups. In pneumothorax detection, models performed well on aggregate benchmarks but failed on cases without chest drains, a key real-world scenario. The failure occurred because the model relied on treatment-related artifacts rather than underlying pathology. These limitations were not visible during standard validation and only emerged when performance was evaluated across real-world subpopulations. [2]
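One way to surface this kind of hidden failure is to report sensitivity per subgroup alongside the aggregate figure. The sketch below assumes each case carries a subgroup tag (here a hypothetical `chest_drain` / `no_chest_drain` label). The record layout, function names, and toy data are illustrative assumptions, not taken from the cited study.

```python
from collections import defaultdict


def sensitivity(records):
    """Fraction of truly positive cases that the model flagged.
    Each record is (subgroup, true_label, predicted_label)."""
    predictions = [pred for _, truth, pred in records if truth == 1]
    if not predictions:
        raise ValueError("no positive cases to evaluate")
    return sum(1 for p in predictions if p == 1) / len(predictions)


def sensitivity_by_subgroup(records):
    """Sensitivity computed separately for every subgroup with positives."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record[0]].append(record)
    return {
        group: sensitivity(rows)
        for group, rows in grouped.items()
        if any(truth == 1 for _, truth, _ in rows)
    }


# Toy data, invented for illustration: positives with a drain are all caught,
# positives without a drain are mostly missed.
records = (
    [("chest_drain", 1, 1)] * 4
    + [("no_chest_drain", 1, 1)]
    + [("no_chest_drain", 1, 0)] * 3
    + [("no_chest_drain", 0, 0)] * 2
)

print("overall:", sensitivity(records))
print("by subgroup:", sensitivity_by_subgroup(records))
```

With these toy numbers, the aggregate sensitivity looks moderate while the subgroup without chest drains is mostly missed. This is the pattern that aggregate benchmarks can hide.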

Validation as a Continuous Process, Not a One-Time Milestone

Clinical environments are dynamic. Imaging protocols evolve, scanners are upgraded, patient populations shift, and disease prevalence changes over time. As these conditions change, the statistical properties of imaging data also shift, a phenomenon widely recognized as dataset shift. An AI model that performs well at deployment may therefore degrade as real-world conditions diverge from those seen during development.

Increasingly, regulators and health systems recognize that validation must be treated as a lifecycle process rather than a one-time milestone. Continuous performance assessment across evolving real-world imaging data is necessary to detect drift, bias, and emerging failure modes that static validation cannot capture.

Real-world imaging data provide the foundation for this continuous validation paradigm. By reflecting how imaging is actually acquired and used in routine care, these datasets enable ongoing, evidence-based monitoring of AI behavior. Clinicians play a critical role in interpreting AI outputs and identifying unexpected failures, but relying on clinician oversight alone does not scale for continuous validation. Scalable validation instead depends on access to real-world imaging data that can surface performance shifts before they translate into clinical risk.
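As one illustration of automated monitoring, the sketch below compares the distribution of model output scores in a recent window against a reference window using the Population Stability Index (PSI). PSI is a choice made here for simplicity, not a method named in this article. The function names, bin count, and toy scores are assumptions, and scores are assumed to lie in [0, 1].

```python
import math


def histogram_fractions(scores, n_bins=10, eps=1e-6):
    """Fraction of scores falling in each equal-width bin over [0, 1].
    Empty bins are floored at eps so the log ratio stays finite."""
    if not scores:
        raise ValueError("need at least one score")
    counts = [0] * n_bins
    for s in scores:
        idx = min(max(int(s * n_bins), 0), n_bins - 1)
        counts[idx] += 1
    return [max(c / len(scores), eps) for c in counts]


def population_stability_index(reference, current, n_bins=10):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref); 0 means identical."""
    ref = histogram_fractions(reference, n_bins)
    cur = histogram_fractions(current, n_bins)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Toy score samples, invented for illustration only.
reference_scores = [0.05, 0.12, 0.18, 0.22, 0.31, 0.35, 0.44, 0.58, 0.71, 0.93]
current_scores = [0.41, 0.47, 0.52, 0.55, 0.63, 0.66, 0.72, 0.78, 0.84, 0.95]

psi = population_stability_index(reference_scores, current_scores)
print(f"PSI = {psi:.2f}")
```

A commonly quoted rule of thumb treats PSI above roughly 0.25 as a shift worth investigating, but thresholds should be set per application, and small samples like the toy data here make the statistic noisy. A score-distribution check of this kind flags that inputs or outputs have changed. Confirming an actual drop in accuracy still requires labeled real-world cases.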

Segmed: A Partner in Bridging the Validation Gap

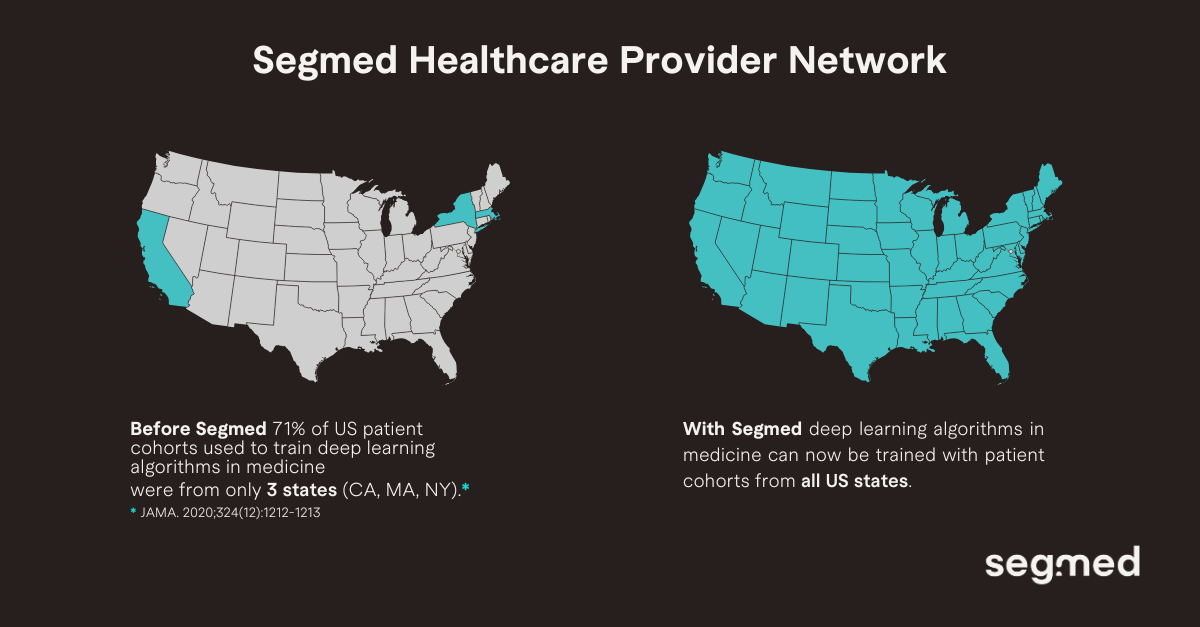

Closing the validation gap in AI requires access to geographically diverse real-world imaging data that enable rigorous and representative evaluation. Segmed is a platform created to support this need.

Historically, medical imaging AI has been developed on datasets drawn from only a few U.S. states, generally those home to prominent academic institutions. This narrow geographic distribution limits a model's exposure to real-world variation.

Segmed addresses this gap by enabling access to real-world imaging data across all regions of the United States. This geographic breadth supports more robust validation, allowing developers to identify regional performance variation and build models that generalize reliably across diverse clinical settings. Ultimately, this will enable AI validation to move beyond aggregate performance metrics toward fairer models, safer clinical use cases, and greater trust in AI within clinical practice.

Our regulatory-grade, de-identified, and annotated datasets are ideal for developing AI models across oncology, neurology, and cardiology disease areas. Segmed has been part of more than 35 FDA clearances, multiple foundation models, and fit-for-purpose real-world evidence research projects.

Connect with us to explore how our diverse, high-quality tokenized imaging datasets can enhance the training and validation of healthcare AI models.

Frequently Asked Questions (FAQ)

What is the AI validation gap in medical imaging?

The AI validation gap in medical imaging refers to the disconnect between strong performance achieved during model development on curated datasets and reduced or inconsistent performance in real-world clinical settings.

Why does imaging AI fail in real-world settings?

Imaging AI often fails in real-world settings because models are trained on curated, homogeneous datasets that do not reflect real-world variability in scanners, acquisition protocols, patient populations, and clinical workflows. When deployed, this mismatch leads to reduced generalizability, hidden bias, and performance degradation across diverse healthcare environments.

How does real-world data improve AI generalization?

Real-world data improve AI generalization by exposing models to the full diversity of clinical practice: variations in imaging equipment, acquisition protocols, patient demographics, and disease presentation. Training and validating AI on such heterogeneous data helps reduce bias, improve robustness, and support more reliable performance when models are deployed in real-world healthcare settings.

What role does real-world imaging data play in continuous AI validation?

The performance of AI models can change over time as clinical practices evolve, datasets drift, and patient populations shift. Real-world imaging data support continuous validation by making it possible to monitor model performance under conditions that reflect actual clinical use.

How does Segmed support real-world AI validation in medical imaging?

Segmed supports real-world AI validation by providing large-scale, diverse, and regulatory-grade imaging datasets that reflect real-world variability in scanners, protocols, geographies, and patient populations. Its standardized curation, quality controls, and privacy-preserving pipelines enable robust external validation, bias detection, and generalizable performance assessment of medical imaging AI models.

References

- Zech JR, Badgeley MA, Liu M, Costa AB, Titano JJ, Oermann EK. Variable generalization performance of a deep learning model to detect pneumonia in chest radiographs: A cross-sectional study. PLoS Med. 2018 Nov 6;15(11):e1002683. doi: 10.1371/journal.pmed.1002683. PMID: 30399157; PMCID: PMC6219764.

- Oakden-Rayner L, Dunnmon J, Carneiro G, Ré C. Hidden Stratification Causes Clinically Meaningful Failures in Machine Learning for Medical Imaging. Proc ACM Conf Health Inference Learn (2020). 2020 Apr;2020:151-159. doi: 10.1145/3368555.3384468. PMID: 33196064; PMCID: PMC7665161.

- U.S. Food and Drug Administration. Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan. 2021. https://www.fda.gov/media/145022/download?attachment

- U.S. Food and Drug Administration. Artificial Intelligence-Enabled Device Software Functions: Lifecycle Management and Marketing Submission Recommendations. Draft Guidance. January 6, 2025. https://www.fda.gov/media/184856/download

- Finlayson SG, Subbaswamy A, Singh K, Bowers J, Kupke A, Zittrain J, et al. The Clinician and Dataset Shift in Artificial Intelligence. N Engl J Med [Internet]. 2021 [cited 2026 Jan 8];385(3):283-6. Available from: https://doi.org/10.1056/NEJMc2104626

- Varoquaux G, Cheplygina V. Machine learning for medical imaging: methodological failures and recommendations for the future. npj Digit Med [Internet]. 2022;5(1):48. Available from: https://doi.org/10.1038/s41746-022-00592-y